The series of six webinars “Strengthening health information networks”, aimed at the networks of the Virtual Health Library (VHL) and the Libraries of the Unified Health System (BiblioSUS), started in June this year. The first three webinars focused on bibliographic control and on the role of the Network as a disseminator and intermediary of scientific knowledge in health.

The following three webinars, in turn, dealt with scholarly communication topics within the scope of the VHL information sources. The last one, held on October 14, addressed the evaluation of science and impact indexes and was presented by two professionals: Lilian Calò, Coordinator of Scientific and Institutional Communication at BIREME, and Jaider Ochoa-Gutiérrez, Professor at the Inter-American School of Library Science, Universidad de Antioquia, Medellín, Colombia.

Lilian began by contextualizing the need to evaluate science in order to develop public policies, promote relevant research, measure the performance of postgraduate programs, and hire and promote researchers according to the merit of their research work. The impact of science, however, cannot be measured in the short term, whereas the impact indicators of scientific publications, usually based on citations, are calculated over windows of 2 to 3 years. There are many scientific impact indexes, and the best known of them, the Impact Factor, inspired a series of very similar but competing indexes, such as SCImago Journal Rank, CiteScore, and others.

The use of impact indicators is not simple, and their interpretation requires knowledge of how they are calculated and of the database used to count citations. One point, however, seems to have become a consensus since the early years of the 21st century: citation-based impact indexes were created to evaluate journals and should not be used to evaluate researchers in hiring processes or career progression, as advocated by the San Francisco Declaration on Research Assessment[1] and the Leiden Manifesto[2]. A measure of excellence of scientific journals is their indexing in thematic databases (for example, LILACS, MEDLINE, PubMed) or multidisciplinary databases (Web of Science, SciELO, Scopus, among others), because of their rather strict criteria for admission and permanence.

Complementing the traditional citation-based indexes, there are also usage and download measurements, which serve to assess how much articles are accessed and read even before they are cited. Calò also mentioned the metrics based on social networks, which are gathered in the Altmetrics index.

Next, Jaider Ochoa-Gutiérrez presented the scenario for the evolution of Latin American and Caribbean science through responsible metrics. According to Ochoa, despite the wide range of available metrics, usually based on citations, the assessment of science remains problematic in terms of the dimensions it covers. Citations, Ochoa notes, are traditionally associated with journal articles and overlook other types of documents, such as books, research reports, patents, undergraduate work, etc. In addition, disciplinary differences in modes of production, as well as the assessment of knowledge produced in different languages, must be considered.

In proposing responsible metrics, Ochoa clarified that this implies participating in a cooperative assessment exercise in which open science plays a key role. Moreover, criteria must be sought to measure the different forms of performance and impact: scientific, social, economic and political. It also requires integrating data sources for bibliometric use and measuring the impact of different types of documents. Ochoa showed that forms of access to documents, open access routes, APC costs and many other variables that are not normally included in bibliometric analyses should also be considered. The importance of creating and using responsible metrics lies in the fact that research is a state-financed asset and, therefore, it is necessary to know or estimate its impact.

As part of the celebration of the 50th anniversary of the PAHO Latin American Center of Perinatology, Women and Reproductive Health (CLAP/WR), CLAP organized a series of five webinars. The fourth webinar, organized in partnership with BIREME, was titled “Infodemia: impact on knowledge production”.

To discuss this important topic, CLAP assembled a panel comprising Thais Forster, Knowledge Management Coordinator at CLAP; Ernesto Spinak, Consultant in IT projects, former Manager of the LILACS Network at BIREME and current SciELO consultant; Lilian Calò, Scientific Communication Coordinator at BIREME; and Rodolfo G. Ponce de León, Regional Counselor for Sexual and Reproductive Health at PAHO, based at CLAP. The panel was moderated by Suzanne Serruya, Director of CLAP, and Diego González, Director of BIREME, and was preceded by a welcome video from the PAHO/WHO Assistant Director, Jarbas Barbosa da Silva.

Thais Forster presented studies on the infodemic and the measures undertaken by PAHO and WHO to reduce its effect on the COVID-19 pandemic through the dissemination of reliable information in their databases. In this scenario, the alliance between BIREME and CLAP allowed the creation of two products that give visibility and access to the main documents and information resources on a priority health topic, maternal and reproductive health: the updated version of the Perinatology Virtual Health Library, available in Spanish, Portuguese and English, and the Window of Knowledge on Women’s and newborn’s health in the context of COVID-19, also in three languages.

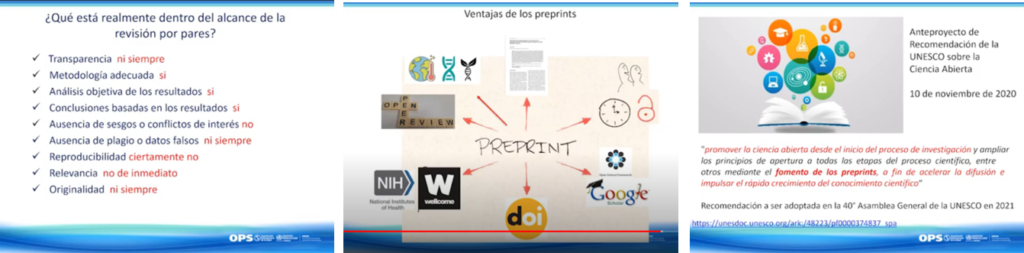

Ernesto Spinak contributed his view on open access, giving a brief history of the movement from the 1990s to the present day and of its influence on scientific publication and on the creation of the VHL, LILACS, SciELO, Redalyc and DOAJ, among other initiatives that provide access to full text, specialized plugins and interoperability between systems, and that allow the creation of identifiers such as DOI and ORCID, among other forms of information and knowledge integration. Spinak highlighted the open access mandates that gave rise to Plan S and concluded his presentation with the UNESCO Recommendation on Open Science, to be approved by the General Conference in 2021.

Lilian Calò presented the characteristics of the scientific information found in journal articles and the limitations of the peer review process in guaranteeing every aspect of the integrity of a research report, contrary to what society generally understands. The public, in general, interprets peer review as a “seal of approval or a mechanism that has the power to protect society from informational chaos”. This misconception becomes particularly relevant when people reject information published as preprints because, unlike journal articles, it has not gone through peer review.

Addressing the advantages, disadvantages and limitations of preprints for reporting COVID-19 research results, Lilian discussed how preprints (which are fast, but not peer-reviewed) and journal articles (slower, but submitted to peer review) can work in parallel as communication channels for scientific research. The magnitude and impact of the pandemic certainly warrant efforts to accelerate the publication of research results, particularly those that can contribute immediately to reducing transmission, morbidity and mortality. It should be noted that, given their relevance, the collections of preprints on COVID-19 from bioRxiv and medRxiv, and the full collections of SciELO Preprints and ARCA Fiocruz, have been included as information sources of the Virtual Health Library.

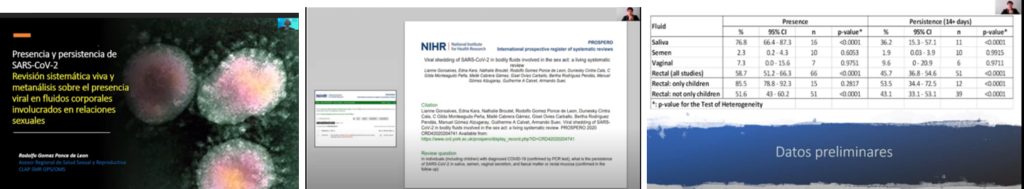

At the end of the panel, Rodolfo Ponce de León presented his work, a frequently updated systematic review, known as a living systematic review, on the sexual transmission of the SARS-CoV-2 virus, together with the preliminary results of this research.

Both the webinar organized by CLAP in celebration of its 50th anniversary and the webinars of the VHL and BiblioSUS networks aimed to bring scholarly communication topics to audiences other than those who normally participate in workshops and courses. The better the public understands the processes of publishing and disseminating research results, the greater its ability to understand and evaluate the information it receives through the media, which increasingly cite scientific sources.

[1] The Declaration on Research Assessment (DORA) recognizes the need to improve the ways of evaluating the results of academic research. The declaration was developed in 2012 during the Annual Meeting of the American Society for Cell Biology in San Francisco and has become a global initiative covering all academic disciplines and all stakeholders, including research institutions, funding agencies, publishers, scientific societies, and researchers. https://sfdora.org/

[2] Leiden Manifesto for research metrics http://www.leidenmanifesto.org/